Oxford spinout RADiCAIT uses AI to make diagnostic imaging more affordable and accessible — catch it at TechCrunch Disrupt 2025

If you’ve ever had a PET scan, you know it’s an ordeal. The scans help doctors detect cancer and track its spread, but the process itself is a logistical nightmare for patients.

It starts with fasting for four to six hours before coming into the hospital — and good luck to you if you live rurally and your local hospital doesn’t have a PET scanner. When you get to the hospital, you’re injected with radioactive material, after which you must wait an hour while it washes through your body. Next, you enter the PET scanner and have to attempt to lie still for 30 minutes while radiologists acquire the image. After that, you have to keep physically away from the elderly, young people, and pregnant women for up to 12 hours because you’re literally semi-radioactive.

Another bottleneck? PET scanners are concentrated in major cities because their radioactive tracers must be produced in nearby cyclotrons (compact particle accelerators) and used within hours, limiting access in rural and regional hospitals.

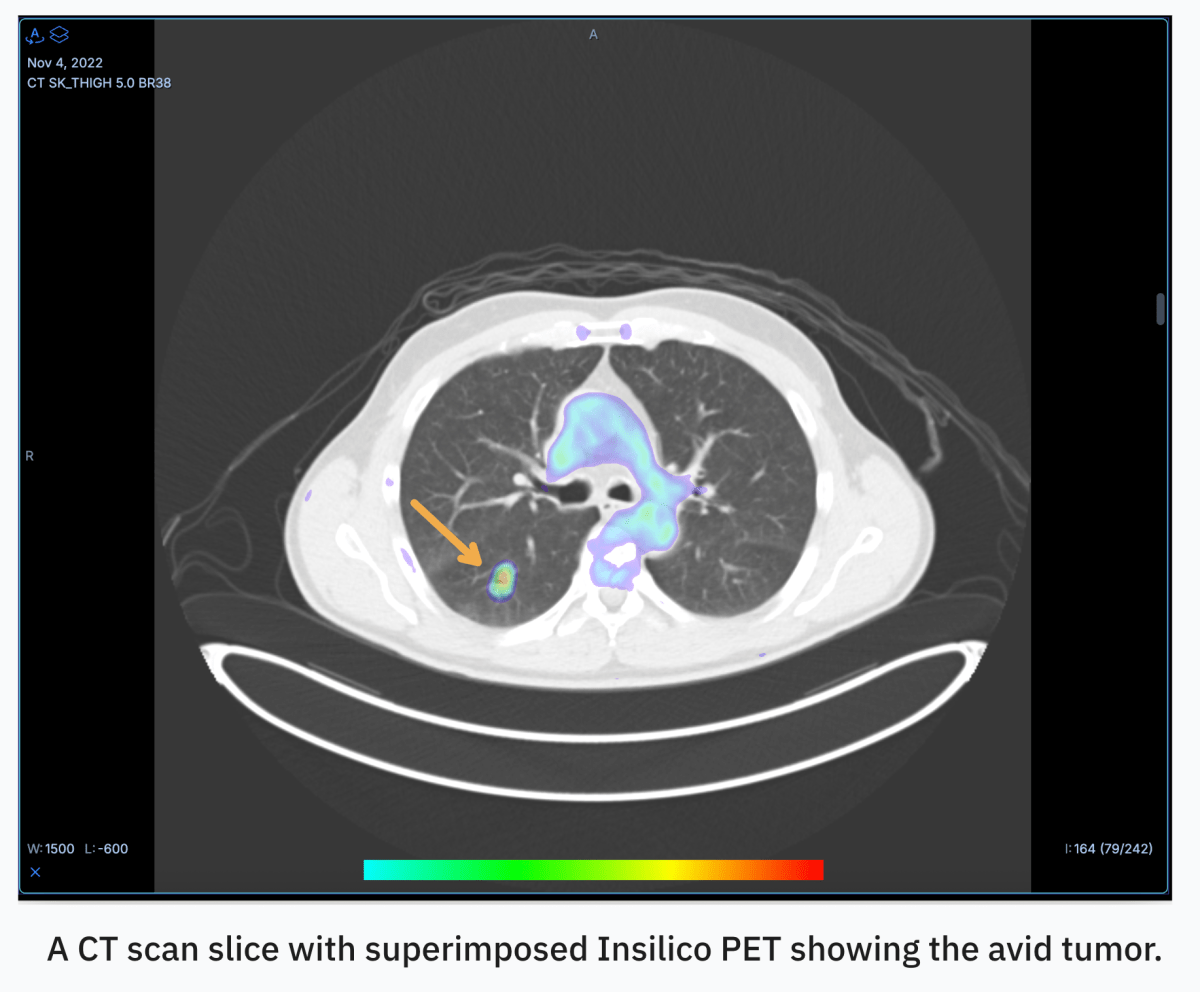

But what if you could use AI to convert CT scans, which are much more accessible and affordable, into PET scans? That’s the pitch of RADiCAIT, an Oxford spinout that came out of stealth this month with $1.7 million in pre-seed financing. The Boston-based startup, which is a Top 20 finalist in Startup Battlefield at TechCrunch Disrupt 2025, has just opened a $5 million raise to advance its clinical trials.

“What we really do is we took the most constrained, complex, and costly medical imaging solution in radiology, and we supplanted it with what is the most accessible, simple and affordable, which is CT,” Sean Walsh, RADiCAIT’s CEO, told TechCrunch.

RADiCAIT’s secret sauce is its foundational model — a generative deep neural network invented in 2021 at the University of Oxford by a team led by the startup’s co-founder and chief medical information officer, Regent Lee.

The model learns by comparing CT and PET scans, mapping them, and picking out patterns in how they relate to each other. Sina Shahandeh, RADiCAIT’s chief technologist, describes it as connecting “distinct physical phenomena” by translating anatomical structure into physiological function. Then the model is directed to pay extra attention to specific features or aspects of the scans, like certain types of tissue or abnormalities. This focused learning is repeated many times with many different examples, so the model can identify which patterns are clinically important.

The final image that goes to doctors for review is created by combining multiple models working together. Shahandeh compares the approach to Google DeepMind’s AlphaFold, the AI that revolutionized protein structure prediction: Both systems learn to translate one type of biological information into another.

Walsh claims the team at RADiCAIT can mathematically prove that their synthetic or generated PET images are statistically similar to real chemical PET scans.

“That’s what our trials show,” he said, “that the same quality of decision has been made when the doctor, radiologist, or oncologist is given a chemical PET or [our AI-generated PET].”

RADiCAIT doesn’t promise to replace the need for PET scans in specific therapeutic settings, like radioligand therapy, which kills cancer cells. But for diagnostic, staging, and monitoring purposes, RADiCAIT’s technology might make PET scans obsolete.

“Because it’s such a constrained system, there’s not enough supply to meet demand for diagnostics and theragnostics,” Walsh said, referring to a medical approach that combines diagnostic imaging (e.g., PET scans) with targeted therapy to treat diseases (e.g., cancer). “So what we’re looking to do is soak up that demand on the diagnostic side. PET scanners themselves should pick up the slack on the theragnostic side.”

RADiCAIT has already begun clinical pilots specifically for lung cancer testing with major health systems like Mass General Brigham and UCSF Health. The startup is now pursuing an FDA clinical trial — a more expensive and involved process that’s driving RADiCAIT’s $5 million seed round. Once that’s approved, the next step will be to do commercial pilots and demonstrate the commercial viability of the product. RADiCAIT also wants to run the same process — clinical pilots, clinical trials, commercial pilots — for colorectal and lymphoma use cases.

Shahandeh said RADiCAIT’s approach to using AI to yield valid insights without the burdens of difficult and expensive tests is “broadly applicable.”

“We’re exploring extensions across radiology,” Shahandeh added. “Expect to see similar innovations linking domains from materials science to biology, chemistry, and physics wherever nature’s hidden relationships can be learned.”

If you want to hear more about RADiCAIT, join us at Disrupt, October 27 to 29 in San Francisco.